The time has come to move away from NHPs and embrace more practical, reproducible, and cost-effective models.

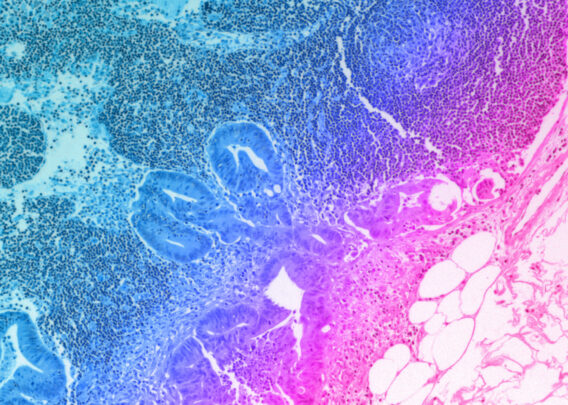

As some of our closest evolutionary relatives, non-human primates (NHPs) such as cynomolgus macaques, sabaeus monkeys, and rhesus macaques are well suited to play the role of “human” test subjects in biomedical research. Because NHPs share many physiological and genetic features with humans, they are likely to respond to a prospective drug much as a human would, which lets researchers test drugs in human-like subjects before advancing them to clinical trials. In this way, NHPs have played a pivotal role in the development of safe and effective vaccines, medical devices, and blockbuster drugs [1].

However, the era of NHP use may be coming to an end, and for good reason. Faced with an increasingly constricted supply chain, technological breakthroughs, and mounting evidence of the shortcomings of NHPs as human avatars, researchers are finding it harder to justify their continued use.

Are NHPs The Right Models?

NHPs have long been viewed as the gold standard for approximating human physiology, mostly because primates share significant physiological and genetic commonalities. However, these models are far from perfect. Phenotypic and genotypic differences can cause a drug to appear safe in NHPs only to prove lethal in humans.

The models are further weakened by inconsistent sourcing: some research NHPs are lab-born, while others are taken from the wild. Beyond the concerns addressed by international laws that aim to protect populations of endangered species, sourcing wild animals is problematic because it introduces variability into the pool of research subjects.

Wild-born animals can differ from their lab-born counterparts in significant ways. They may carry genetic variants that alter drug metabolism, be infected with viruses, or have altered microbiomes. Together, these variables can lead to inconsistent or non-reproducible results, eroding the robustness of NHP research as a whole.

Collectively, these issues raise the question of whether NHPs are truly a gold standard. Among animal models, they may still be the closest approximation to humans that we can find. But technological advances may mean that we can look beyond animal models for a more human-relevant system.

Towards A Human-Relevant Research Model

As the supply of NHPs grows ever tighter and their scientific robustness is called further into question, technological and methodological advances in tissue modeling may bring the era of NHP-dominated research to an end.

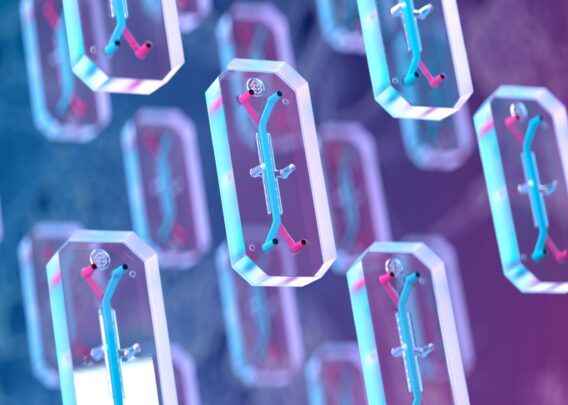

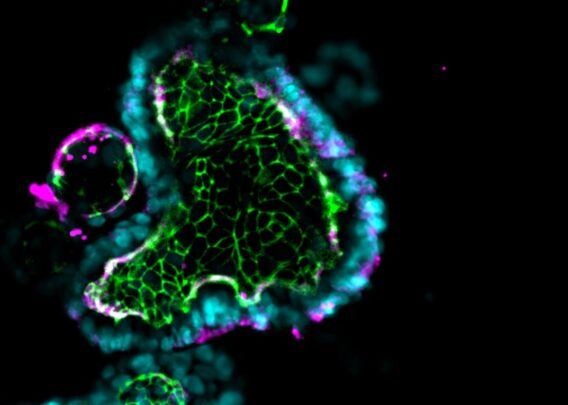

Microfluidic Organ-on-a-Chip technology (Organ-Chips) is an important step in the right direction. Many tissue-specific Organ-Chips have already been developed for various applications, including Liver- and Kidney-Chips for preclinical toxicology screening.

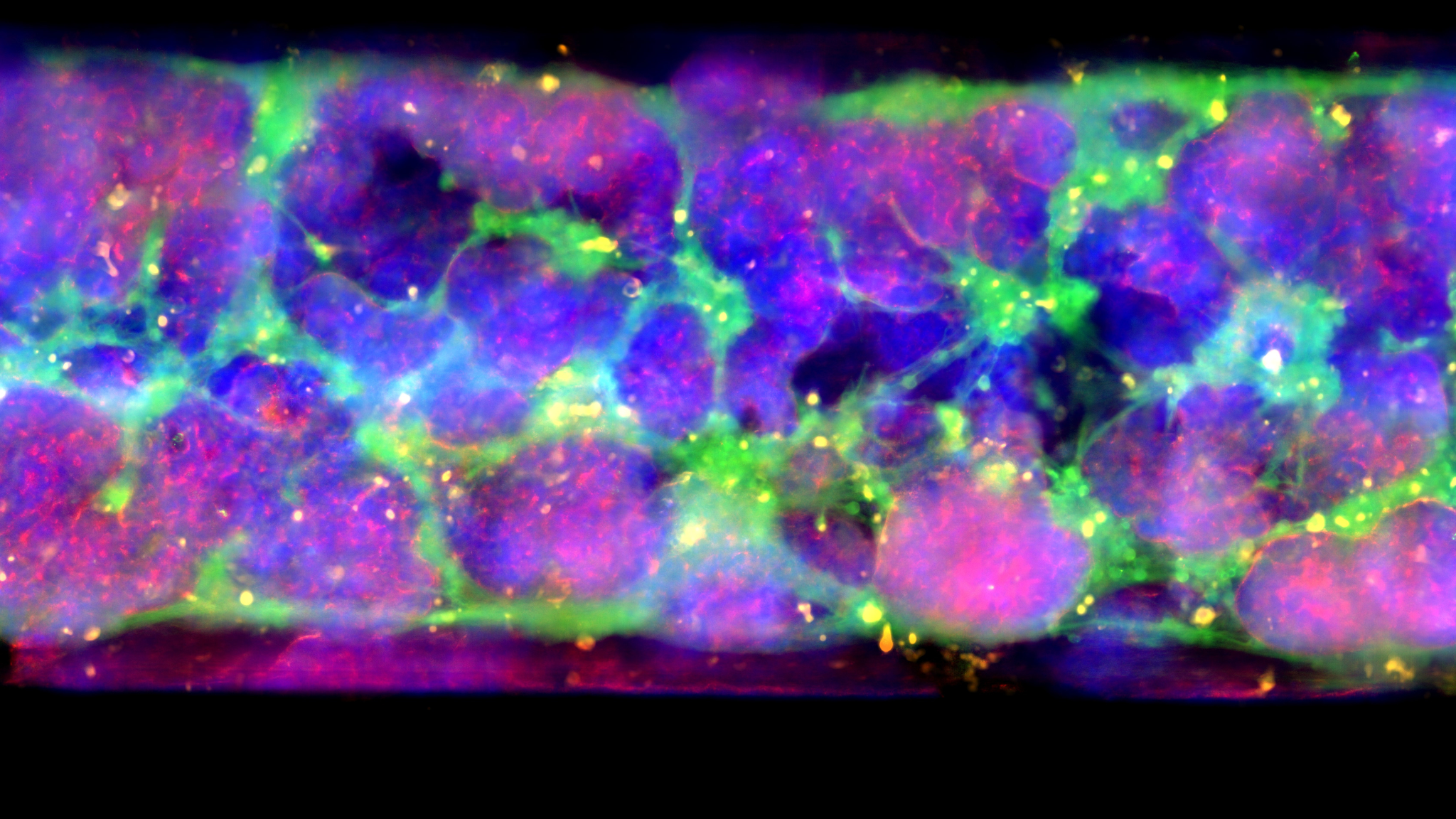

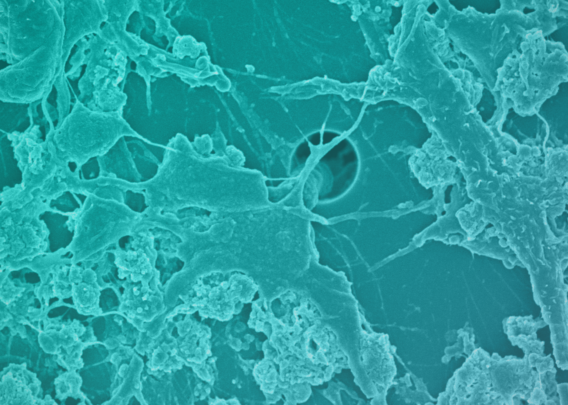

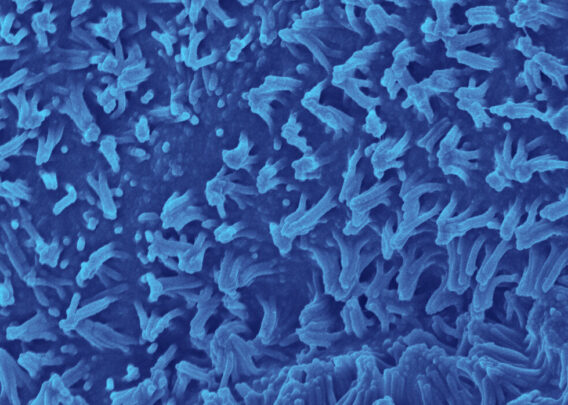

Organ-Chips are small, transparent devices designed to recapitulate the complexity of in vivo microenvironments, with the ultimate aim of coaxing cells into behaving as they would in the body. This is achieved by culturing cells in a three-dimensional environment micropatterned to emulate the tissue architecture of the target organ. Heterogeneous cells are cultured in this environment with tissue-specific extracellular matrix proteins. Microfluidics enable the functional and mechanical simulation of dynamic fluid flow through the tissue. Lastly, mechanical stimuli are provided when necessary by compressing or relaxing vacuum channels within the device (as may be needed when modeling the human lung, for example).

Collectively, these features foster an environment where human cells can be readily observed and are more likely to behave as they would in vivo. As such, Organ-Chips are a highly human-relevant model type that may come to be a new gold standard in preclinical drug screening. To this end, the Emulate human Liver-Chip has already been shown to detect hepatotoxic compounds that animal models failed to detect [2]. Had the Liver-Chip been used in the preclinical screening of the drugs used in the study, their toxic profiles could have been detected before the drugs entered the clinic, and 242 patient deaths could have been prevented.

The FDA recognizes the challenges posed by current NHP supply constraints and is working with researchers to minimize the use of NHPs in nonclinical studies. The agency recently released guidance on how labs can mitigate the impacts of NHP shortages by replacing NHPs with alternative models, including non-animal models, whenever feasible [3]. In addition, the FDA Modernization Act 2.0 authorizes alternatives to animal testing, such as cell-based assays, computer models, and microphysiological systems like Organ-Chips, as acceptable models for drug development [4]. While Organ-Chips are not yet ready to assume all the roles played by NHPs, the technology is rapidly evolving, and the current NHP shortages should spur scientists to accelerate their adoption of next-generation alternatives where appropriate.

Moving Away from NHPs

As next-generation drug development models become more advanced, researchers should begin to critically examine the performance of the models they use, including NHPs. As many as 90% of drugs that enter human clinical trials fail to make it to market, which is ample indication that current preclinical screening approaches are not providing the necessary information [5]. Because Organ-Chips start with human tissue, they have the potential to provide more precise data to inform drug development and help companies choose agents that are most likely to succeed in trials.

With the ever-increasing challenges around the use of NHPs creating research bottlenecks, it’s time to reassess longstanding drug development practices. Relying on familiar but flawed approaches is bad for patients, animals, and companies. With technologies like Organ-Chips now available, researchers can lessen their reliance on NHPs while improving their ability to predict human response ahead of clinical trials. Now is the time to move drug development into a human-centric era.

References

1. “State of the Science and Future Needs for Nonhuman Primate Model Systems – Meeting 6.” Nationalacademies.org, 2023, www.nationalacademies.org/event/12-01-2022/state-of-the-science-and-future-needs-for-nonhuman-primate-model-systems-meeting-6. Accessed 28 Mar. 2023.

2. Ewart, Lorna, et al. “Performance Assessment and Economic Analysis of a Human Liver-Chip for Predictive Toxicology.” Communications Medicine, vol. 2, no. 1, 6 Dec. 2022, pp. 1–16, www.nature.com/articles/s43856-022-00209-1, https://doi.org/10.1038/s43856-022-00209-1.

3. Center for Drug Evaluation and Research. “Nonclinical Considerations for Mitigating Nonhuman Primate Supply Constraints Arising from the COVID-19 Pandemic.” U.S. Food and Drug Administration, 11 May 2022, www.fda.gov/regulatory-information/search-fda-guidance-documents/nonclinical-considerations-mitigating-nonhuman-primate-supply-constraints-arising-covid-19-pandemic. Accessed 29 Mar. 2023.

4. U.S. Congress. “S.5002 - 117th Congress (2021-2022).” Congress.gov, 9 Sept. 2022, www.congress.gov/bill/117th-congress/senate-bill/5002/text.

5. Sun, Duxin, et al. “Why 90% of Clinical Drug Development Fails and How to Improve It?” Acta Pharmaceutica Sinica B, vol. 12, no. 7, Feb. 2022, https://doi.org/10.1016/j.apsb.2022.02.002.