It isn’t enough that models identify toxic drugs; they must also avoid mistaking safe drugs for dangerous ones. Read on to learn about the importance of specificity in the preclinical stages of drug development.

Drug-induced liver injury (DILI) has been a persistent threat to drug development for decades. Animals like rats, dogs, and monkeys are meant to be a last line of defense against DILI, catching the toxic effects that drugs could have before they reach humans. Yet, differences between species severely limit these models, and the consequences of this gap are borne out in halted clinical trials and even patient deaths.

Put simply, there is a translational gap between our current preclinical models and the patients who rely on them.

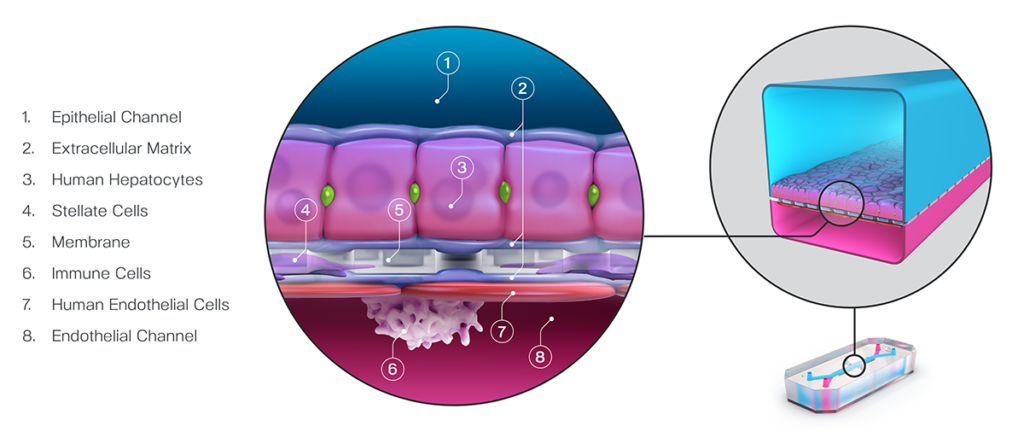

The Emulate Liver-Chip—an advanced, three-dimensional culture system that mimics human liver tissue—was designed to help fill this gap. The Liver-Chip allows scientists to observe potential drug effects on human liver tissue and, in turn, better predict which drug candidates are likely to cause DILI (see Figure 1).

Such a preclinical model could be extremely useful in preventing candidate drugs with a hepatic liability from reaching patients, but exactly how useful depends on how sensitive and specific it is.


Measuring Preclinical Model Accuracy

In preclinical drug development, a wide range of model systems, including animals, spheroids, and Organ-Chips, are used for decision-making. Researchers rely on these models to help them determine which drug candidates should advance into clinical trials. Whether scientists make the right decision depends largely on the quality of the models they use. That quality is measured by two metrics: sensitivity and specificity.

In this context, “sensitivity” describes how often a model correctly identifies a toxic drug candidate as toxic. A model with 100% sensitivity would flag every harmful drug candidate. In contrast, “specificity” describes how often a model correctly identifies a non-toxic drug candidate as non-toxic. A 100%-specific model would never claim that a non-toxic candidate is toxic. It’s important to note that a model can be 100% sensitive without being very specific. For example, an overeager model that calls most candidates toxic may capture all toxic candidates (100% sensitivity) but also mislabel many non-toxic candidates as toxic (mediocre specificity).
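The two definitions above can be made concrete with a short sketch. The panel of ten candidates below is invented purely for illustration (it is not data from any study), and the function name `sensitivity_specificity` is a hypothetical helper, not part of any Emulate tool.

```python
def sensitivity_specificity(truth, flagged):
    """Return (sensitivity, specificity) for boolean lists.

    truth[i]   is True if candidate i is actually toxic.
    flagged[i] is True if the model called candidate i toxic.
    """
    if len(truth) != len(flagged):
        raise ValueError("truth and flagged must have the same length")
    pairs = list(zip(truth, flagged))
    tp = sum(1 for t, f in pairs if t and f)          # toxic, flagged
    fn = sum(1 for t, f in pairs if t and not f)      # toxic, missed
    tn = sum(1 for t, f in pairs if not t and not f)  # safe, cleared
    fp = sum(1 for t, f in pairs if not t and f)      # safe, wrongly flagged
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one toxic and one non-toxic candidate")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical panel: 4 toxic candidates followed by 6 non-toxic ones.
truth = [True] * 4 + [False] * 6
# An "overeager" model that flags 8 of the 10 candidates as toxic.
overeager = [True] * 8 + [False] * 2

sens, spec = sensitivity_specificity(truth, overeager)
print(f"sensitivity={sens:.0%}, specificity={spec:.0%}")
# prints: sensitivity=100%, specificity=33%
```

In this made-up case the overeager model catches all four toxic candidates but also rejects four of the six safe ones, which is the pattern the paragraph above describes.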

In an ideal world, preclinical drug development would use models that are 100% sensitive and 100% specific. Unfortunately, no model is perfect. Approximately 90% of drugs entering clinical trials fail, with many failing due to toxicity issues. This alone suggests that there is a strong need for more accurate decision-making tools.

The Give and Take of Sensitivity and Specificity

Researchers want preclinical toxicology models with the best sensitivity possible, as higher sensitivity means more successful clinical trials, safer patients, and better economics. However, this cannot come at the cost of failing good drugs. An overly sensitive model with a low bar for what it considers “toxic” would catch all toxic drugs, but it may also flag drugs that are, in reality, safe and effective in humans. Good drugs are rare, and a lot of effort and investment goes into their development. Even one good drug that is wrongly kept from the clinic can end up costing pharmaceutical companies billions and leave a patient population without treatment. Models should do their utmost to classify non-toxic compounds as non-toxic; that is, they should aim for 100% specificity.

But how can drug development insist on perfect specificity when no model is perfect? Fortunately, there is a give-and-take between sensitivity and specificity that model developers can take advantage of: one can be traded for the other.

In decision analysis, sensitivity and specificity can be “dialed in” for the model in question. In most cases, this involves setting a threshold in the analysis of the model’s output. In a recent study published in Communications Medicine, part of Nature Portfolio, the Emulate team set a threshold of 375 on the Liver-Chip’s quantitative output; in the case of hepatic spheroids, an older model system, researchers have set a threshold of 50. In both cases, the higher the threshold, the more sensitive and less specific the model tends to be. These thresholds were selected precisely to dial the systems in to 100% specificity.
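To illustrate how a threshold trades sensitivity for specificity, here is a minimal sketch. It assumes a score on which lower values indicate greater toxicity, so a candidate is flagged when its score falls below the threshold; this convention is an assumption chosen to match the statement that higher thresholds are more sensitive, not a description of how the Liver-Chip output is actually computed. All scores, thresholds, and function names are hypothetical.

```python
def classify(scores, threshold):
    """Flag a candidate as toxic when its score is below the threshold
    (assumed convention: lower score = more toxic)."""
    return [s < threshold for s in scores]


def rates(truth, flagged):
    """Return (sensitivity, specificity) for boolean lists."""
    tp = sum(1 for t, f in zip(truth, flagged) if t and f)
    fn = sum(1 for t, f in zip(truth, flagged) if t and not f)
    tn = sum(1 for t, f in zip(truth, flagged) if not t and not f)
    fp = sum(1 for t, f in zip(truth, flagged) if not t and f)
    return tp / (tp + fn), tn / (tn + fp)


def highest_threshold_with_full_specificity(scores, truth, candidates):
    """Scan thresholds in ascending order and keep the highest one that
    still flags no non-toxic candidate. Returns (threshold, sensitivity),
    or None if no candidate threshold reaches 100% specificity."""
    best = None
    for threshold in sorted(candidates):
        sens, spec = rates(truth, classify(scores, threshold))
        if spec == 1.0:
            best = (threshold, sens)
    return best


# Invented scores: four toxic candidates, then four non-toxic ones.
scores = [5, 20, 80, 400, 150, 300, 900, 1200]
truth = [True, True, True, True, False, False, False, False]
candidate_thresholds = [10, 40, 100, 200, 500]

for threshold in candidate_thresholds:
    sens, spec = rates(truth, classify(scores, threshold))
    print(f"threshold={threshold:>4}  sensitivity={sens:.0%}  specificity={spec:.0%}")

print(highest_threshold_with_full_specificity(scores, truth, candidate_thresholds))
```

On this toy data, raising the threshold from 10 to 500 moves sensitivity from 25% to 100% while specificity falls from 100% to 50%. Picking the highest threshold that keeps specificity at 100% recovers as much sensitivity as possible without rejecting any safe candidate, which is the spirit of the threshold selection described above.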

This is why Ewart et al.’s findings are so striking. Even while maintaining such strict specificity, the Liver-Chip achieved a staggering 87% sensitivity. This means that, on top of correctly identifying most of the toxic drugs, the Liver-Chip never misidentified a non-toxic drug in the study as toxic. For drug developers, this means that no good drugs, and none of the considerable resources poured into their development, would be wasted. Using models like the Liver-Chip that achieve high sensitivity alongside perfect specificity would allow drug developers to deprioritize potentially dangerous drugs without sacrificing good drugs. In all, this could lead to more productive drug development pipelines, safer drugs progressing to clinical trials, and more patient lives saved.